Federated Learning Meets Blockchain: Inside FLock's Decentralized AI Network

Exploring how FLock combines Federated Learning with blockchain-based incentive mechanisms to replace centralized AI systems.

Decentralized AI is beginning to cross the chasm. The United Nations Development Programme (UNDP) has long advocated for the sustainable use of AI to empower developing nations and underserved communities as part of achieving its Sustainable Development Goals (SDGs). Earlier this week, the UNDP selected FLock, a decentralized AI network, as its strategic AI partner. Why does this matter, and how does FLock’s decentralized approach address challenges that centralized AI cannot?

AI Is Inevitable

The role of AI continues to grow in our day-to-day lives. Millions of people use AI-powered applications like ChatGPT. Enterprises are pouring hundreds of billions into AI infrastructure, training, and workflow integration. Governments are actively implementing AI solutions to make public operations more efficient.

This is all for good reason: AI stands to unlock a level of productivity gains not yet seen. It’s already leading to groundbreaking applications across various fields of scientific research. People are automating busy work, freeing up time for more creative initiatives and experiments. A new generation of goods and services is being turned from natural language into existence, a practice that has come to be known as “vibe-coding”. McKinsey projects $4.4 trillion in productivity gains from AI adoption, while Goldman Sachs forecasts an increase of up to 7% in global GDP by 2030.

There is a caveat, though: the AI models we know and use today are black boxes, closed-source LLMs developed and maintained by centralized, for-profit entities. These entities control how the AI models are developed and trained, and determine the terms and means by which they can be accessed and used. At a glance, these are resourceful, legally compliant companies with a strong economic incentive to keep improving these AI models and listening to their customers’ feedback and requirements. What exactly, then, is the concern?

Why Not Just Use Centralized AI?

People have been waking up to the dangerous realities of social media algorithms, realizing the extent to which these black boxes maintained by for-profit entities are impacting the way they think and behave. The implications with AI are significantly greater.

Binji’s AI therapist tweet offers a more facetious example, but it speaks to the concerning implications of confiding in black boxes that operate in unknown ways behind the scenes. A shift away from centralized AI is not about realizing utopian cypherpunk ideals, however. There are legitimate concerns and risks at the individual, enterprise, and national levels:

Single point of failure: Centralized AI introduces a single point of failure for its users. First, this poses an operational risk if the provider's facilities go offline due to outages, attacks, or natural disasters, which is not uncommon. Second, there are substantial security concerns, including the risk of leaked user prompts, chat histories, and sensitive data. Such breaches at AI companies could expose everyday user information and become national security liabilities when government or sensitive data is involved.

Data Protection: Centralized AI apps need to store and maintain user data to improve models and provide personalized experiences. Users are providing LLMs with all kinds of data about themselves, including sensitive and personal information. The concern here is twofold. First, that data is at risk of being breached. Second, AI companies may monetize user data the same way social media and internet companies have historically monetized their users.

Value Plurality and Model Output Bias: Model outputs are at risk of bias towards a single entity’s values and perspectives. The Google Gemini controversy, though a mostly isolated example, highlighted the potential risk of models providing misinformation to their users.

Scalability: Processing power limited to a single entity creates potential bottlenecks over time. Network delays, capacity constraints, and overprovisioning challenges can arise as usage scales, and the entire network runs on the assumption that the centralized AI provider alone has enough processing power and capacity to handle it all.

Beyond this, innovation is simply stifled if a limited number of people or entities can experiment and develop new models. Most of the internet’s infrastructure runs on Linux, an open-source operating system that empowers developers worldwide to innovate and adapt freely. Wikipedia, a freely editable and collaboratively maintained knowledge base, serves as a gateway to information for billions of people globally. In the world of computing and software, open source technology movements have repeatedly shown that collaborative development can produce superior, more resilient solutions than closed-source alternatives.

The UNDP: Using AI As A Transformative Force For Good

AI is a technology powerful enough to change the world for good. What may be mere quality-of-life improvements in one country can be transformational 0-to-1 improvements in others. Yet centralized AI inherently conflicts with its potential as a public good.

Established in 1965, the UNDP was formed to help eradicate poverty and reduce inequalities worldwide through sustainable development. For years now, the UNDP has been advocating for embracing AI as a transformative force for good. Specifically, the UNDP believes that AI can help accelerate its mission.

The key challenges they are concerned with include:

Digital public infrastructure: Developing nations may not be able to afford to build Google/OpenAI-scale systems. At the same time, they do not want to be dependent on AI developed by other nations like the US or China.

Nation-specific needs: Nations need AI solutions tailored to their specific needs and scale, rather than settling for cookie-cutter, general-purpose solutions.

Data sovereignty: Nations need to protect their citizens’ data, especially across healthcare, agriculture, and other sensitive and personal data.

Earlier this week, the UNDP announced a new strategic AI partner. It wasn’t OpenAI, Anthropic, or Google, but a new decentralized alternative: FLock.

Introducing FLock

FLock, short for Federated machine learning on blockchain, is a decentralized AI network. Instead of relying on a single entity, it enables anyone around the world to contribute models, data, and computing resources and participate in an open, collaborative environment for decentralized AI training. To do this, FLock combines conventional and Federated Learning AI model training with automated blockchain-based reward distribution.

Altogether, this creates an open marketplace of app-specific models which are community owned, built, and led.

How It All Works

To better understand FLock’s approach, it helps to first understand Federated Learning.

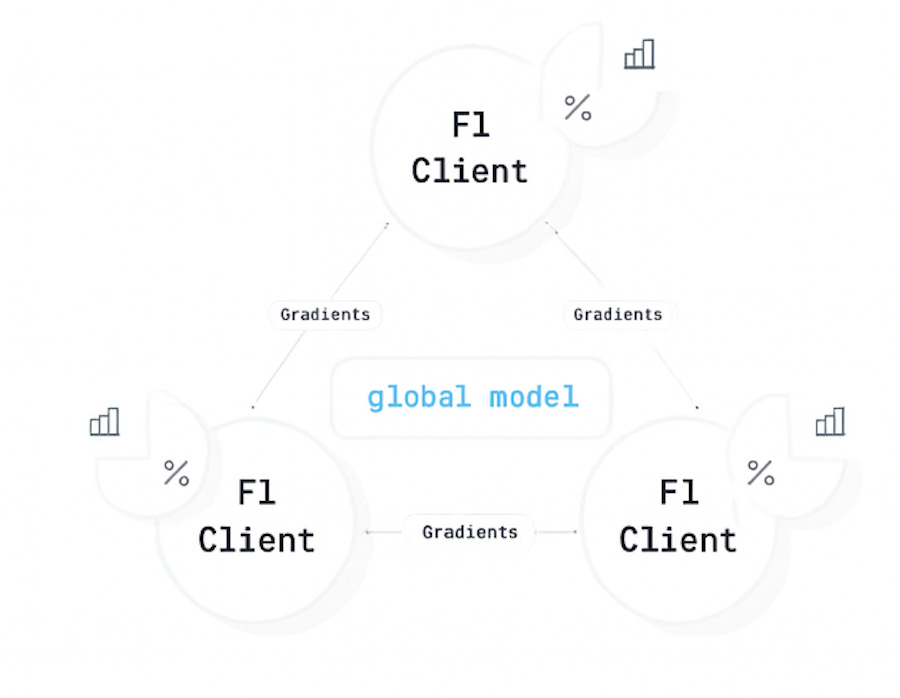

Federated Learning, or federated Machine Learning, is a machine learning technique in which multiple participating clients collaboratively train a global model without exposing any raw data. This approach is designed to improve output quality by enabling AI models to be trained on wider and more diverse data sources, while protecting the privacy of participants’ data.

Federated learning typically involves a central hub server on which the global model lives and from which it is distributed for local training. Participating clients train the global model on their local data and send only their model updates (gradients or updated weights, never the underlying data) back to the hub server. The hub server then aggregates these updates into the global model, and the process repeats until training is complete.
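The round-based loop described above can be sketched in a few lines of Python. This is a minimal illustration of federated averaging on a one-parameter model, not FLock's implementation: the function names (`local_update`, `aggregate`, `federated_training`), the learning rate, and the toy data are all assumptions made for the example, and clients here return updated weights, which is one common variant.

```python
from typing import List, Tuple

Dataset = List[Tuple[float, float]]  # (x, y) pairs held privately by one client


def local_update(w: float, data: Dataset, lr: float = 0.05, steps: int = 5) -> float:
    """Train the global weight on one client's private data; return the new weight."""
    for _ in range(steps):
        # Gradient of mean squared error for the model y = w * x
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def aggregate(updates: List[float], sizes: List[int]) -> float:
    """Hub step: average client weights, weighted by local dataset size."""
    total = sum(sizes)
    return sum(u * n for u, n in zip(updates, sizes)) / total


def federated_training(clients: List[Dataset], rounds: int = 30) -> float:
    w = 0.0  # global model held by the hub
    for _ in range(rounds):
        # Only the updated weight leaves each client, never the (x, y) pairs
        updates = [local_update(w, data) for data in clients]
        w = aggregate(updates, [len(d) for d in clients])
    return w


# Three hypothetical clients whose private data all follow y = 3x
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
    [(3.0, 9.0)],
]
global_w = federated_training(clients)
print(round(global_w, 4))
```

The hub never sees a single data point, yet the global weight converges towards the slope shared by all three private datasets.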

Federated learning has proven to be an effective way to protect user privacy during AI training, but there is a catch with this setup. To start, the central hub server, on which the global model lives, is still a single point of failure: should it stop working for whatever reason, all learning stops. Additionally, federated learning still assumes that clients contribute honestly. In other words, it doesn’t address the risk of data poisoning, a technique in which clients provide inauthentic data intended to degrade the quality of a model’s training and therefore its output. This is a growing concern as more of today’s world becomes reliant on AI models, especially in fields like healthcare.
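To see why unchecked contributions matter, consider a toy aggregation step in which one client out of six submits a deliberately bad update. The numbers below are invented for illustration. The median is shown only as a standard robust-statistics contrast to plain averaging; it is not a description of how FLock defends against poisoning.

```python
from statistics import mean, median

# Hypothetical updates to a single model parameter from five honest clients
honest_updates = [0.98, 1.02, 1.00, 0.99, 1.01]
# One malicious client submits an extreme value to drag the model off course
poisoned_update = -50.0

all_updates = honest_updates + [poisoned_update]

mean_clean = mean(honest_updates)      # what the hub would compute without the attacker
mean_poisoned = mean(all_updates)      # plain averaging trusts every client equally
median_poisoned = median(all_updates)  # a robust aggregate largely ignores the outlier

print(mean_clean, mean_poisoned, median_poisoned)
```

A single dishonest participant is enough to flip the sign of the averaged update, which is why a hub that simply trusts its clients is fragile.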

FLock aims to address these problems by implementing cryptographic proof and economic incentives enforced onchain. So how exactly does that work?

FLock System Architecture

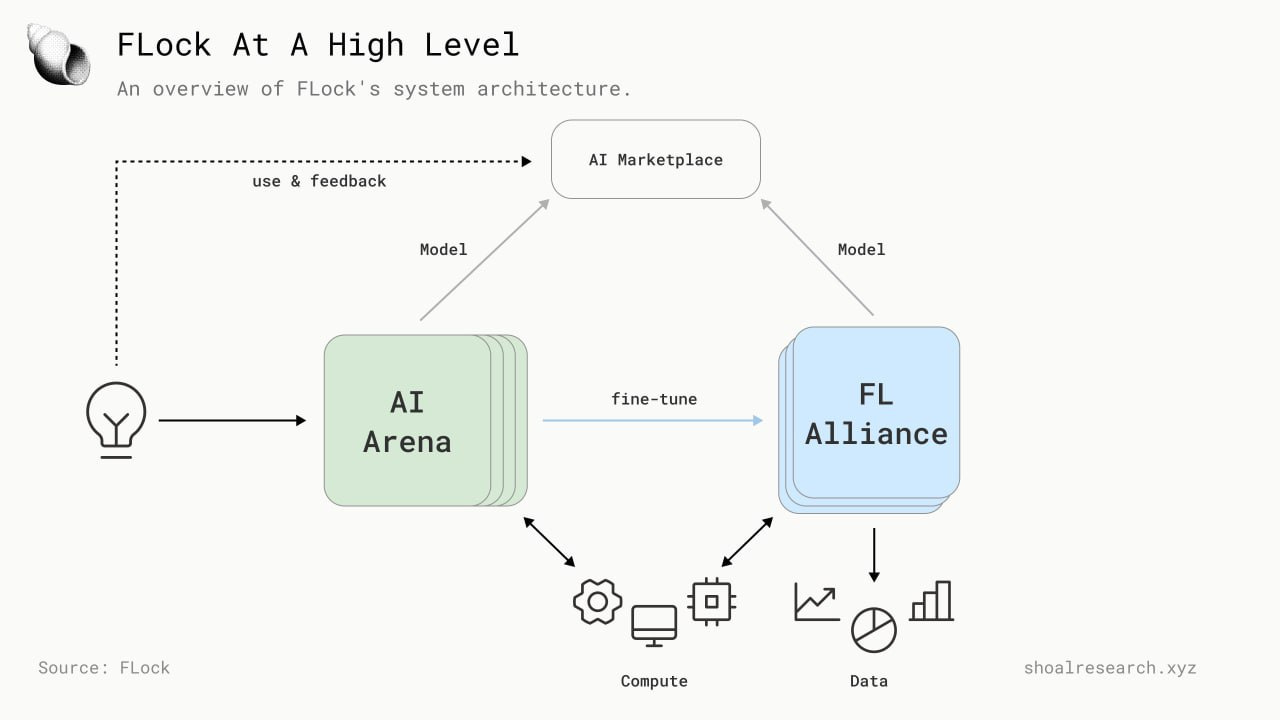

FLock consists of three interconnected systems that form its decentralized AI ecosystem:

The AI Arena: A decentralized training platform where developers submit models that compete on public datasets. These models are evaluated by a distributed network of validators, with an important feature: validators cannot identify which model came from which trainer, preventing collusion. High-performing models earn rewards while underperforming ones can be refined and resubmitted. This competitive process produces models optimized for real-world performance, with participation requiring token stakes to ensure accountability.

The FL Alliance: FLock’s Federated Learning client that enables training on sensitive private data without exposing it. The system randomly assigns participants as either proposers (who train models locally) or voters (who evaluate results). When a majority of voters approve a model update, proposers receive rewards. Those who submit corrupted data or false validations lose their stake.

The AI Marketplace: Successful models from Arena and Alliance are deployed in the Marketplace for production use, with version control, performance monitoring, and automated updates. Model hosts provide infrastructure and set pricing, while users pay for API access beyond free tier limits. Higher-quality models attract more users, generating revenue for creators and hosts - creating market incentives for continuous improvement.

Altogether, models begin in the Arena with public data training, undergo refinement in the Alliance with private data, then reach production in the Marketplace. Usage data feeds back to improve subsequent training cycles. The key innovation lies in how FLock applies onchain mechanisms to coordinate incentives among network participants.
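The FL Alliance round described above (random role assignment, a majority vote, then rewards or penalties) can be sketched as follows. This is a simplified model under stated assumptions, not FLock's contract logic: the function names, the pro rata reward split, the `slash_rate` parameter, and the choice to penalize every proposer when an update is rejected are all hypothetical.

```python
import random
from typing import Dict, List


def assign_roles(participants: List[str], n_proposers: int, seed: int) -> Dict[str, str]:
    """Randomly split participants into proposers and voters."""
    rng = random.Random(seed)  # seeded only so the example is reproducible
    shuffled = participants[:]
    rng.shuffle(shuffled)
    return {p: ("proposer" if i < n_proposers else "voter")
            for i, p in enumerate(shuffled)}


def settle_round(votes: Dict[str, bool], proposer_stakes: Dict[str, float],
                 reward_pool: float, slash_rate: float) -> Dict[str, float]:
    """Return each proposer's balance change given the voters' approve/reject votes."""
    approvals = sum(votes.values())          # True counts as 1
    approved = approvals > len(votes) / 2    # strict majority of voters
    total_stake = sum(proposer_stakes.values())
    if approved:
        # Assumption: the reward pool is shared in proportion to stake
        return {p: reward_pool * s / total_stake for p, s in proposer_stakes.items()}
    # Assumption: a rejected update costs each proposer a fraction of their stake
    return {p: -slash_rate * s for p, s in proposer_stakes.items()}


roles = assign_roles(["a", "b", "c", "d", "e"], n_proposers=2, seed=7)
stakes = {"p1": 100.0, "p2": 300.0}
accepted = settle_round({"v1": True, "v2": True, "v3": False}, stakes, 40.0, 0.5)
rejected = settle_round({"v1": False, "v2": False, "v3": True}, stakes, 40.0, 0.5)
print(roles, accepted, rejected)
```

Because roles are drawn at random each round, a participant cannot know in advance whether they will be training or judging, which makes coordinated manipulation harder to plan.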

The Onchain Layer

FLock’s key innovation is using blockchain to solve the lack of coordination among participants in federated learning. FLOCK, a token that lives on the Base L2, serves as the key mechanism that aligns incentives across the network.

Proof of Stake

FLock implements an incentive mechanism that rewards honest behavior and penalizes malicious behavior among its federated learning participants. This mechanism, based on a research paper published by the FLock team, uses a proof-of-stake system in which participants stake FLOCK tokens to participate. Malicious behavior, such as data poisoning, puts real economic value at risk, while honest contributions earn rewards. Rewards are distributed in FLOCK, proportional to stake size and performance quality.

There are three ways to participate:

Training node operators: stake tokens to compete in model training tasks.

Validators: stake to evaluate and score submitted models.

Delegators: delegate stake to training nodes and earn rewards accordingly.

Smart Contract Orchestration

FLock uses a set of smart contracts to orchestrate the training and validation process. When tasks are created, the smart contracts are responsible for:

Selecting participants using stake-weighted randomness to prevent gaming

Distributing rewards based on aggregated validation scores

Slashing stakes of participants who submit poisoned data or false validations

Managing reward distribution between operators and delegators
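The four responsibilities listed above can be modelled off-chain in plain Python to make the mechanics concrete. This is a sketch under stated assumptions rather than FLock's contract code: the function names, the use of the median to aggregate validator scores, the score-proportional payout, and the `commission` parameter are all hypothetical choices made for illustration.

```python
import random
from statistics import median
from typing import Dict, List


def select_participants(stakes: Dict[str, float], k: int, rng: random.Random) -> List[str]:
    """Pick k distinct participants; the chance of selection grows with stake."""
    pool = dict(stakes)
    chosen: List[str] = []
    for _ in range(min(k, len(pool))):
        names = list(pool)
        pick = rng.choices(names, weights=[pool[n] for n in names], k=1)[0]
        chosen.append(pick)
        del pool[pick]  # sample without replacement
    return chosen


def aggregate_scores(by_validator: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Combine every validator's score for each model (median is an assumption)."""
    models = {m for scores in by_validator.values() for m in scores}
    return {m: median(s[m] for s in by_validator.values() if m in s) for m in models}


def distribute_rewards(pool: float, scores: Dict[str, float]) -> Dict[str, float]:
    """Split the reward pool in proportion to aggregated score."""
    total = sum(scores.values())
    if total <= 0:
        return {m: 0.0 for m in scores}
    return {m: pool * s / total for m, s in scores.items()}


def split_with_delegators(reward: float, operator_stake: float,
                          delegated: Dict[str, float], commission: float) -> Dict[str, float]:
    """Share a node's reward pro rata; the operator keeps a commission on delegator shares."""
    total = operator_stake + sum(delegated.values())
    payouts = {"operator": reward * operator_stake / total}
    for name, amount in delegated.items():
        share = reward * amount / total
        payouts["operator"] += share * commission
        payouts[name] = share * (1 - commission)
    return payouts


def slash(stake: float, rate: float) -> float:
    """Stake remaining after a penalty for poisoned data or false validation."""
    return stake * (1 - rate)


chosen = select_participants({"a": 10, "b": 20, "c": 70}, k=2, rng=random.Random(0))
scores = aggregate_scores({
    "v1": {"m1": 0.8, "m2": 0.2},
    "v2": {"m1": 0.6, "m2": 0.4},
    "v3": {"m1": 0.7, "m2": 0.3},
})
rewards = distribute_rewards(100.0, scores)
payouts = split_with_delegators(rewards["m1"], 100.0, {"d1": 300.0}, commission=0.1)
print(chosen, rewards, payouts, slash(100.0, 0.5))
```

On-chain, the randomness source would need to be verifiable rather than a local generator; `random.Random` stands in for it here purely so the example runs.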

More on FLOCK

Live on the Base L2, the FLOCK token serves three functions within the ecosystem:

Utility: FLOCK is staked across different roles, with larger stakes increasing selection probability for training and validation tasks. Task creators can pay additional FLOCK as bounties to expedite their training processes. The system implements time-based multipliers: longer staking periods (up to 365 days) generate gmFLOCK (governance-weighted FLOCK), increasing influence and reward shares from network emissions.

Payment: Users access models trained in AI Arena and FL Alliance with rate limits based on stake amounts, paying in FLOCK for usage beyond these limits. Model hosts stake FLOCK to serve models and set custom pricing structures.

Governance: FLOCK holders participate in DAO governance, voting on task verification (determining which tasks qualify for rewards), parameter adjustments (reward distributions, slashing rates), and protocol upgrades.
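The time-based multiplier mentioned under Utility can be illustrated with a simple weighting function. The source states only that longer staking periods, up to 365 days, generate gmFLOCK; the linear formula below is a hypothetical stand-in chosen for clarity, and the function name `gm_flock` is invented for this example.

```python
MAX_LOCK_DAYS = 365  # cap taken from the text


def gm_flock(amount: float, lock_days: int) -> float:
    """Hypothetical linear weighting: a full 365-day lock yields 1 gmFLOCK per FLOCK."""
    if amount < 0 or lock_days < 0:
        raise ValueError("amount and lock_days must be non-negative")
    effective_days = min(lock_days, MAX_LOCK_DAYS)  # locks beyond the cap earn no extra weight
    return amount * effective_days / MAX_LOCK_DAYS


print(gm_flock(1000, 365))  # full-length lock
print(gm_flock(1000, 730))  # capped at the 365-day maximum
print(gm_flock(730, 100))   # shorter lock, proportionally less weight
```

Whatever the exact curve, the design intent is the same: participants who commit for longer gain more influence and a larger share of emissions.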

Use Cases and Applications

FLock is already being deployed across numerous real-world applications where deep, app-specific contextual knowledge, strict privacy guarantees, and decentralization matter:

Specialized Blockchain Development: The Aptos Move LLM

FLock partnered with the Aptos Foundation to develop an LLM that outperforms ChatGPT-4o in generating Move-specific code, from simple "Hello World" scripts to complex programs like yield tokenization with AMM trading functionality. The model was trained specifically on community-contributed Move code datasets, taking advantage of FLock's decentralized training infrastructure.

Initial testing demonstrated superior performance compared to ChatGPT-4o, with greater accuracy and adherence to Aptos-specific requirements. The model, built on top of DeepSeek's foundation and available on Hugging Face, achieves ChatGPT-4-level performance specifically for Move programming.

Community-Specific AI Assistants

FLock enables tailored AI assistants that understand specific contexts:

FarcasterGPT: Trained on Farcaster protocol data to understand its unique social dynamics, terminology, and user patterns

ScrollGPT: Optimized for the Scroll L2 ecosystem, understanding its technical documentation, governance proposals, and developer patterns

DAO-specific models: Custom assistants that understand individual DAOs' governance structures, historical decisions, and community norms

Privacy-Preserving Healthcare Applications

FLock enables healthcare providers to collaborate on diabetes prediction models using linear regression, where each participant retains control over their data while computing model updates locally. Multiple hospitals can train on their local patient records, contributing only mathematical gradients to improve the global model.

This approach is crucial for complying with stringent health data protection regulations such as HIPAA, as patient data never leaves the institution. By integrating data from diverse demographics and geographical locations without sharing raw data, FLock helps develop more accurate and generalized models that account for population variations.
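The hospital scenario above can be sketched as gradient averaging over a small linear regression. Everything here is illustrative: the records are synthetic, the feature layout (a bias term plus one normalized risk factor) is invented, and the function names are hypothetical rather than part of any FLock client.

```python
from typing import List, Tuple

Record = Tuple[List[float], float]  # (features, target) for one patient


def local_gradient(weights: List[float], records: List[Record]) -> List[float]:
    """Gradient of mean squared error, computed on-site from one hospital's records."""
    grad = [0.0] * len(weights)
    for features, target in records:
        error = sum(w * f for w, f in zip(weights, features)) - target
        for j, f in enumerate(features):
            grad[j] += 2 * error * f / len(records)
    return grad


def average_gradients(grads: List[List[float]]) -> List[float]:
    """Aggregation step: only these gradient vectors are ever shared."""
    return [sum(column) / len(grads) for column in zip(*grads)]


def train(hospitals: List[List[Record]], n_features: int,
          lr: float = 0.1, rounds: int = 2000) -> List[float]:
    weights = [0.0] * n_features
    for _ in range(rounds):
        grads = [local_gradient(weights, records) for records in hospitals]
        avg = average_gradients(grads)
        weights = [w - lr * g for w, g in zip(weights, avg)]
    return weights


# Synthetic records: features are [1.0 (bias), x]; targets follow 0.5 + 2.0 * x
hospital_a = [([1.0, 0.0], 0.5), ([1.0, 1.0], 2.5)]
hospital_b = [([1.0, 0.5], 1.5), ([1.0, 0.25], 1.0), ([1.0, 0.75], 2.0)]

weights = train([hospital_a, hospital_b], n_features=2)
print([round(w, 4) for w in weights])
```

Neither hospital's records appear anywhere outside `local_gradient`, yet the shared model recovers the relationship present in both datasets.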

Decentralized Compute Integration: FLock x Akash

FLock has integrated with Akash Network to create one-click deployment templates for running FLock validators and training nodes on decentralized compute infrastructure. This partnership exemplifies the composability of decentralized systems as users can now:

Deploy FLock validators on Akash with minimal configuration

Access GPU resources through Akash's marketplace

Run training nodes using Docker containers with customizable hardware specifications

Pay for compute using AKT tokens while earning FLOCK for validation

The integration includes pre-configured templates requiring only an API key and task ID, making decentralized AI training accessible to non-technical users.

Natural Language Database Access: Text2SQL

FLock's Text2SQL model enables users to query databases using natural language commands, removing the need to write SQL syntax by hand. Developed through AI Arena's competitive training process, the model was trained on extensively cleaned and manually reviewed open-source datasets.

The Path Forward

AI represents the next foundational layer of human-computer interaction. However, the practical concerns around centralized AI are becoming harder and harder to ignore. Single points of failure could cripple nations. Data sovereignty violations could compromise millions. Innovation bottlenecks stand to largely favor incumbent interests.

At Shoal Research, we believe this layer must be built just like the internet was: as an open, collaborative effort rather than a corporate, centralized service. We’re excited to see this frontier push forward, and think FLock’s approach of combining Federated Learning with onchain incentive mechanisms and cryptography offers an innovative solution here. Following its selection by the UNDP, FLock will mentor five pilot projects across climate finance, inclusive energy, and social protection, an opportunity to show that decentralized AI can address humanity's most complex challenges.

Time will tell how this plays out, but FLock is well-positioned to thrive in a world that embraces AI as a public good and decentralized AI networks as the way to get there.

References

United Nations Development Programme. (n.d.). Sustainable Development Goals. UNDP. https://www.undp.org/sustainable-development-goals

FLock. (n.d.). Documentation. FLock Docs. https://docs.flock.io/

FLock. (n.d.). Whitepaper. FLock. https://www.flock.io/whitepaper

FLock. (2022). FLock: Federated learning on blockchain. arXiv. https://arxiv.org/abs/2211.04344

FLock. (2024). Decentralized federated learning: Incentives and mechanisms. ACM Digital Library. https://dl.acm.org/doi/10.1145/3701716.3715484

FLock. (2024). Blockchain-enabled federated learning: Privacy and trust in decentralized AI. IEEE Xplore. https://ieeexplore.ieee.org/abstract/document/10471193

Not financial or tax advice. The purpose of this post is purely educational and should not be considered as investment advice, legal advice, a request to buy or sell any assets, or a suggestion to make any financial decisions. It is not a substitute for tax advice. Please consult with your accountant and conduct your own research.

Disclosures. All posts are the author's own, not the views of their employer. This post has been created in collaboration with the FLock team. At Shoal Research, we aim to ensure all content is objective and independent. Our internal review processes uphold the highest standards of integrity, and all potential conflicts of interest are disclosed and rigorously managed to maintain the credibility and impartiality of our research.