zkTLS: Verifiable Data Composability

zkTLS integrates zero-knowledge proofs into TLS, enabling secure, private data verification between Web2 and Web3.

Web Proofs and the Internet of Tomorrow

Today’s internet is a mosaic of walled gardens, each governed by centralized authorities that dictate standards and control interoperability. Users are compelled to share personal data with these platforms, which may then be handed over to governing bodies or third parties, often without full transparency or user consent. While this model functions—albeit imperfectly—in sectors like finance, it poses significant challenges for social media and the internet at large.

One of the most pressing issues is the ease with which operators can sever integrations with affiliated services or competitors, effectively locking users into their ecosystems. We've witnessed this repeatedly: Facebook (now Meta) restricting data access to integrated partners or Twitter placing API access behind a paywall. Such actions not only stifle competition but also limit user autonomy and expose personal data to risks like theft and loss, especially in the event of targeted cyberattacks.

But what if there were a way to dismantle these barriers without compromising security or functionality? Enter zero-knowledge Transport Layer Security (zkTLS)—a revolutionary approach that integrates zero-knowledge proofs into the foundational protocols of the web. zkTLS offers a path to liberate users from the retention techniques employed by big tech, mitigating user lock-in while enhancing privacy and security.

In this paper, we delve into the concept of web proofs through the lens of zkTLS. We'll explore how this innovative technology can reshape the internet by enabling secure, private interactions without the need to divulge unnecessary personal information. By leveraging zkTLS, we can pave the way for an internet that is not only more open and interoperable but also more aligned with the needs and rights of individual users.

Join us as we unpack the potential of zkTLS to transform the digital landscape, fostering an internet built on trust, freedom, and user empowerment. It's time to shine a light on the technologies that can redefine our online experience for the better.

The AGI advent and autonomous agents on social media

The recent rise of artificial intelligence, mostly via large language models (LLMs) and models that generate images with large language models (such as GILL), has exacerbated these issues. These models are trained to be as human-like as possible, so they need access to enormous amounts of data to learn the “skill” of being human, in the hope of eventually attaining sentience and producing their own thoughts.

The training of such models follows a “learn by observation and regurgitation” approach, exposing them to observable human interaction in every possible context. And what better venue is there for gaining insight into random people's personal lives and interactions than social media?

User data has been heavily harvested across every major channel and funneled into the training sets of our AI overlords, fuelling the rapid rise of their offerings. Social media companies understandably counter this scraping, since they may be competitors or would prefer to be paid for access to user data. Data is thus now a costly commodity and will likely remain so, especially as the internet and social media return to niche, curated communes with high trust levels.

The Web3 Lens

Digital assets and blockchain technology have innovated greatly, but mostly in a siloed and self-referential manner, building systems that are mostly independent of traditional systems and values.

The relevant value proposition in this context is that blockchain technology enables the immutability of data, and the underlying ethos is such that systems built atop them replicate their openness. As such, anyone can access any data that is available onchain, without the data's owner having to “permit” them.

This shows how different crypto is from both traditional systems and the AI hype: it goes as far as proposing that all data be open to everyone, permissionlessly.

However, the opposition of their underlying ideologies doesn't negate the fact that:

each comes with its own benefits and downsides;

each system's benefits can, in most cases, be used to enhance the other's features; and

if we're serious about “onboarding the next billion” to crypto, then it is a trivial assumption with nontrivial implications that the user base of blockchains is a subset of that of legacy institutions.

The inability or refusal of legacy systems to take advantage of crypto innovations to enhance their performance shouldn't (and doesn't) prevent crypto from taking advantage of their reliable components. The most notable such component is legacy systems’ brimming vat of user behavioral data gathered over decades, which is sold to advertisers for revenue and is frequently exposed in data breaches.

The same applies to crypto + AI: while this intersection is still saturated with undeniable cash grabs and buzzwords primed for the next raise at dizzying valuations, there's still genuine exploration to be done.

Personal data through the lens of crypto

Entry into crypto faces a cold-start problem: apart from centralized exchanges, there is no widely known way for the average user to gain access or exposure to blockchain technology. The average entrant has to create an entirely new identity (in most cases a wallet address) and then find a way to fund it before using the services of decentralized applications.

Allowing a user’s identity in legacy systems to persist onchain, whether fully or partially (if the user wishes), will essentially unlock the next wave of innovation in DeFi beyond speculation and musical chairs. This is the stated goal of web proofs and “zkTLS”: enabling the portability of offchain user data, with provable provenance and privacy guarantees, so that users can easily access the offerings of onchain environments in a more trustless manner than is currently possible.

Data use is typically restricted at its point of origin, which prevents the portability of user data and is one of the reasons crypto users end up with identities split between legacy and onchain systems. Web proofs propose to solve this by letting users act as an oracle of sorts between legacy and crypto institutions, and even between legacy institutions.

Below, we will begin with an overview of the traditional TLS scheme.

Web proofs and the TLS problem

Transport Layer Security (TLS) is a cryptographic protocol that provides privacy and data integrity guarantees between communicating parties over a computer network. The parties are usually a client, which sends requests, and a server, which sends back responses to those requests. For consistency, we refer to the client as the prover from here on.

TLS generally consists of two sub-protocols:

The handshake protocol is responsible for establishing a shared, secure communication channel between the user (also the prover) and the data source (also the server).

This protocol uses asymmetric cryptographic schemes to let the server and prover agree on which cipher suite to use for data transmission. The server authenticates itself with its certificate (client authentication is optional and rarely used on the web), and both sides securely derive the shared secret keys used in the second phase of the session.

The record protocol is responsible for transmitting data while maintaining integrity (and optionally, confidentiality), using symmetric cryptographic schemes.

In this phase, the data being sent is fragmented into records carrying at most 2^14 (16,384) bytes of plaintext each, which older TLS versions could also compress and which may be padded. Each record is protected with a message authentication code (MAC) and encrypted (in TLS 1.3, both are done at once by an authenticated encryption scheme), and the result is transmitted to the prover. Upon reception, the record is decrypted and verified using the prover's copy of the session keys, then decompressed if necessary, reassembled, and delivered to higher-level protocols.
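To make the record-protection step concrete, here is a minimal Python sketch, assuming the session keys were already agreed during the handshake. It fragments data into records of at most 2^14 bytes and applies MAC-then-encrypt, roughly as older TLS cipher suites did. The SHA-256 counter-mode keystream is a toy stand-in for a real cipher such as AES, and the function names (`protect`, `unprotect`) are hypothetical, not part of any TLS library.

```python
import hashlib
import hmac
import secrets

MAX_FRAGMENT = 2 ** 14  # TLS limits each record to 2^14 bytes of plaintext
TAG_LEN = 32            # HMAC-SHA256 output size


def keystream(key: bytes, seq: int, length: int) -> bytes:
    """Toy stream cipher (SHA-256 in counter mode). NOT what TLS actually uses."""
    out = b""
    counter = 0
    while len(out) < length:
        block = key + seq.to_bytes(8, "big") + counter.to_bytes(4, "big")
        out += hashlib.sha256(block).digest()
        counter += 1
    return out[:length]


def xor(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def record_mac(mac_key: bytes, seq: int, fragment: bytes) -> bytes:
    # The sequence number is bound into the MAC so records cannot be reordered.
    return hmac.new(mac_key, seq.to_bytes(8, "big") + fragment, hashlib.sha256).digest()


def protect(enc_key: bytes, mac_key: bytes, data: bytes) -> list:
    """Sender side: fragment, MAC, then encrypt each record."""
    records = []
    for seq, start in enumerate(range(0, len(data), MAX_FRAGMENT)):
        fragment = data[start:start + MAX_FRAGMENT]
        plaintext = fragment + record_mac(mac_key, seq, fragment)
        records.append(xor(plaintext, keystream(enc_key, seq, len(plaintext))))
    return records


def unprotect(enc_key: bytes, mac_key: bytes, records: list) -> bytes:
    """Receiver side: decrypt, verify each MAC, and reassemble."""
    out = b""
    for seq, record in enumerate(records):
        plaintext = xor(record, keystream(enc_key, seq, len(record)))
        fragment, tag = plaintext[:-TAG_LEN], plaintext[-TAG_LEN:]
        if not hmac.compare_digest(tag, record_mac(mac_key, seq, fragment)):
            raise ValueError(f"record {seq}: MAC check failed")
        out += fragment
    return out


if __name__ == "__main__":
    enc_key, mac_key = secrets.token_bytes(32), secrets.token_bytes(32)
    response = secrets.token_bytes(40_000)
    records = protect(enc_key, mac_key, response)
    print(len(records))  # 40,000 bytes in records of <= 16,384 bytes -> 3
    assert unprotect(enc_key, mac_key, records) == response
```

Note that both keys are symmetric: whoever can run `unprotect` can also run `protect`.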

This is a generalization of the process, as it varies by version and authentication scheme. The key point is that only the prover and server can communicate with and understand each other, and the integrity of each record is protected with symmetric keys that both of them hold. Anyone holding those keys, including the prover, can produce records that verify correctly, so the data passed to the prover is unusable in any other context: the prover cannot prove its source. A web proof built naively from the results of a traditional TLS session therefore proves nothing to a third party, because it cannot show that:

the proof was constructed using messages from a specific server, and

the messages were not altered by the prover after it received them from the server.
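The core problem fits in a few lines. In this minimal Python sketch (HMAC-SHA256 plays the role of the record MAC, and the JSON payloads are made up for illustration), the prover holds the same MAC key as the server, so it can tag a fabricated response that verifies exactly as well as the genuine one.

```python
import hashlib
import hmac
import secrets

# After the handshake, client (prover) and server share the same symmetric MAC key.
session_mac_key = secrets.token_bytes(32)


def tag(key: bytes, message: bytes) -> bytes:
    return hmac.new(key, message, hashlib.sha256).digest()


def tag_is_valid(key: bytes, message: bytes, mac: bytes) -> bool:
    return hmac.compare_digest(tag(key, message), mac)


# What the server actually sent (illustrative payload).
genuine = b'{"balance": 120}'
genuine_tag = tag(session_mac_key, genuine)      # computed by the server

# What a dishonest prover fabricates afterwards with its copy of the key.
forged = b'{"balance": 1000000}'
forged_tag = tag(session_mac_key, forged)        # computed by the prover

# Both pass the only integrity check a TLS transcript offers.
print(tag_is_valid(session_mac_key, genuine, genuine_tag))  # True
print(tag_is_valid(session_mac_key, forged, forged_tag))    # True
```

Symmetric MACs give integrity only between the key holders; convincing an outsider requires evidence from a party the prover cannot impersonate.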

The widespread adoption of the TLS protocol enables users to access their data, private or otherwise, over channels with provable end-to-end confidentiality. The strict confidentiality of the protocol means that a user's data is available to only them, and whoever else the data source chooses to share it with.

This is effectively a form of user lock-in: users cannot access and export their data as needed, and almost always have to get explicit permission from the data source to export it, even for the simplest purposes.

This can be solved by introducing a third party that verifies, among other desirable properties, that the prover did not alter the server's response. This family of protocols, pioneered by crypto startups to enable data provenance, is (erroneously) referred to as zkTLS (zero-knowledge transport layer security).

What is zkTLS?

The crypto ecosystem has long been separated from the traditional "Web2" world due to technical constraints that prevent seamless interaction and composability. This is primarily because the underlying infrastructures are not directly interoperable. The inability of these two ecosystems to share data has led to significant fragmentation, which is difficult to overcome since most “Web3” systems lack the means to verify data from the “Web2” ecosystem. This isolationist design within the Web3 ecosystem is not a feature but rather a limitation. Therefore, a solution is needed to establish a connection and foster integration between these ecosystems.

In the Web2 world, verification of data is limited: HTTPS only secures transmission between the user and the website, and it does not give third parties a means to independently verify the authenticity of the data. Web3, which operates on decentralized blockchain technology, addresses this for onchain data by storing it on a transparent, immutable ledger that anyone can independently verify without relying on a central authority or the data's source. Zero-knowledge proofs extend this further by making it possible to share a claim about data and have anyone in the network verify it without seeing the underlying data.

zkTLS (Zero-Knowledge Transport Layer Security) is a protocol that creates a gateway between private Web2 data and the Web3 ecosystem. It is a hybrid protocol that combines zero-knowledge proofs with TLS encryption, allowing data to move securely between the Web2 and Web3 ecosystems while maintaining privacy. It allows users to securely export data from any website without leaking unnecessary data. zkTLS integrates two technologies. TLS (Transport Layer Security) is a cryptographic protocol that serves as the backbone of secure communication over the internet; it is mostly used in secure browsing, where it encrypts and protects data transmitted between browsers and web servers. ZK (zero-knowledge) proofs, on the other hand, allow a prover to prove the validity of a piece of information to a verifier without revealing the actual content of the information to the verifier.

This combination ensures that while data remains encrypted during transmission, it can also be verifiable without the exposure of sensitive details. This is especially valuable in decentralized applications (dApps), where privacy and security are of great importance.

How does zkTLS work?

zkTLS enhances the standard TLS protocol by adding a verifiable privacy layer through zero-knowledge proofs. TLS encrypts communication to prevent eavesdropping, and zkTLS adds selective data disclosure on top: the prover creates zero-knowledge proofs about the session data and shares them with the verifier. These proofs allow the verifier to validate certain facts (e.g., that a transaction meets specific requirements) without seeing sensitive data like account balances or personal details. This balance between security and privacy is what makes zkTLS unique. Many zkTLS designs also rely on Multi-Party Computation (MPC), where multiple parties cooperate to encrypt and decrypt data without any single party having access to the full encryption key. Because the prover never holds the full key on its own, it cannot tamper with the transmitted data without being detected, which adds an extra layer of trust to the process.

Understanding the Applications

With its improved privacy, security, and user reach, zkTLS is transforming the way blockchain ecosystems can interact with off-chain data. For instance, DeFi systems and token airdrops have historically relied on on-chain activity, such as transaction history, swaps, or bridges, which restricts eligible users to people who are already active in the cryptocurrency space. zkTLS expands these boundaries by letting off-chain activities, like streaming music on Spotify, purchasing items from Amazon, or taking rideshare trips, serve as criteria for distributing tokens. This increases the pool of potential token recipients and allows projects to use data on real-world activities in a privacy-preserving manner. By leveraging zkTLS, airdrops can reward verified, genuine users, fostering a larger, more diverse community while making fraud harder.

In DeFi lending, zkTLS facilitates trust and transparency by enabling secure verification of off-chain facts, such as income or contributions to open-source projects, which can influence on-chain rewards without exposing sensitive financial data. Unlike traditional oracles, which may lack privacy and scalability, zkTLS-powered oracles can provide tamper-evident, verifiable data, such as price feeds or other market metrics, while revealing no more of the underlying data than necessary, making them suitable for privacy-conscious DeFi applications. This could transform lending by supporting undercollateralized loans: users could prove their income without disclosing their full financial histories, unlocking more inclusive lending opportunities while upholding data privacy.

zkTLS can also support cross-chain and multi-chain operations by helping authenticate the data that accompanies asset transfers between blockchain networks such as Ethereum, Solana, and Cosmos. This matters for projects that require seamless integration across multiple chains without central oversight, since verifiable data reduces the risks of cross-chain transfers and promotes greater ecosystem fluidity and interoperability. Moreover, zkTLS can secure API requests made by decentralized applications (dApps), which often involve interactions with external systems that may expose sensitive information. By sharing only verified data and keeping sensitive content private, zkTLS helps maintain the integrity and privacy of user interactions and data transmissions across various blockchain applications.

zkTLS can also be applied in various Web2-to-Web2 scenarios, where privacy and trustless verification are paramount. For instance, Nike could use zkTLS to offer a 50% discount to participants of the 2024 Chicago Marathon by verifying their participation without directly collaborating with the marathon organizers. Similarly, Uber Eats could use zkTLS to incentivize DoorDash customers with a free soda on every purchase for users who have completed over 100 orders, without requiring access to DoorDash’s data. In both cases, zkTLS enables privacy-preserving, trustless validation of user eligibility based on external data, fostering seamless collaborations and competitive advantages without sharing sensitive information.

Reinventing web proofs using zkTLS

Traditional TLS transcripts don't allow a third party to detect faulty/altered data, but zkTLS schemes solve this by backing data with proof that it originated from a particular server.

zkTLS allows the addition of a third party in some form, generally referred to as the verifier, who is responsible for checking that the prover reported honest data, as obtained from the server.

Based on their verification model, zkTLS schemes can be classified into:

Proxy-based architecture (proxy-TLS)

TEE-based architecture (TEE-TLS)

MPC-based architecture (MPC-TLS)

Below, we'll go through a generalization of each scheme and highlight significant innovators in the group.

TEE-Based zkTLS

Trusted Execution Environments (TEEs) are specialized, almost tamper-proof sections of modern CPUs designed to perform sensitive computations in isolation within enclaves. TEEs ensure that confidential operations—such as user authentication, data decryption, or cryptographic signing—remain secure even if the rest of the system is compromised. This isolation allows secure computation without exposing sensitive information to service providers or external parties, making them suitable for computational processes where privacy is strictly required.

TEEs have been around for decades in traditional settings and have been proposed for crypto use cases before, but they have only recently started to gain attention in the crypto space due to their potential to provide guarantees in various contexts, such as their use by Flashbots.

In TEE-based zkTLS setups, the verifier is a TEE, so the trust assumptions of the system directly depend on the TEE's manufacturer or service provider's reputation and the TEE's resistance to physical or side-channel attacks. TEE-based zkTLS implementations are highly efficient, adding minimal computational and networking overhead. This efficiency makes them attractive for performance-critical use cases, provided that users accept the inherent trust and security risks associated with TEEs.

The foundation of this system was outlined in the research paper Town Crier (2016), and notable teams building on this concept include Clique and Teleport. Teleport is a consumer-facing implementation that lets users generate and share unique links allowing others to post from the user's Twitter account. It is a specialization of the brokered delegation concept co-introduced by Andrew Miller.

In the best-case scenario, the prover passes raw data values obtained from the server to the TEE, which authenticates the message's contents within an enclave. After authentication, the TEE signs the message, creating a web proof, and passes it directly to the third-party protocol for which the prover requested the proof. This model allows you to prove the authenticity of responses from a website: you can provide a TEE-generated signature confirming that a proper TLS handshake was performed and that specific requests and responses were genuinely exchanged. If the recipient trusts that the TEE has not been compromised or tampered with, they can confidently accept the proof without verifying the website directly.
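A minimal sketch of the attestation step, assuming the enclave has already checked the TLS session itself (that part is omitted). The hypothetical `ToyEnclave` keeps its signing key internal and signs the server name plus a hash of the transcript, so the recipient only needs the enclave's public key. Schnorr signatures over a tiny prime-order group stand in for a real attestation scheme; the parameters are far too small to be secure, and in practice trust in the public key would come from the TEE vendor's attestation chain.

```python
import hashlib
import secrets

# Toy Schnorr group: P = 2*Q + 1 is a safe prime and G = 4 has prime order Q.
# Illustration only; real systems use large groups or elliptic curves.
P, Q, G = 2039, 1019, 4


def challenge(r: int, statement: bytes) -> int:
    digest = hashlib.sha256(r.to_bytes(2, "big") + statement).digest()
    return int.from_bytes(digest, "big") % Q


class ToyEnclave:
    """Hypothetical enclave whose signing key never leaves the object."""

    def __init__(self) -> None:
        self._secret_key = secrets.randbelow(Q - 1) + 1
        self.public_key = pow(G, self._secret_key, P)

    def attest(self, server_name: str, transcript: bytes):
        # Bind the server identity to a hash of the authenticated transcript.
        statement = server_name.encode() + b"|" + hashlib.sha256(transcript).digest()
        k = secrets.randbelow(Q - 1) + 1  # fresh nonce per signature
        r = pow(G, k, P)
        s = (k + self._secret_key * challenge(r, statement)) % Q
        return statement, (r, s)


def verify_attestation(public_key: int, statement: bytes, signature) -> bool:
    r, s = signature
    # Schnorr check: G^s == r * public_key^e (mod P)
    return pow(G, s, P) == (r * pow(public_key, challenge(r, statement), P)) % P


if __name__ == "__main__":
    enclave = ToyEnclave()
    statement, sig = enclave.attest("bank.example", b"HTTP/1.1 200 OK ...")
    print(verify_attestation(enclave.public_key, statement, sig))  # True
```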

As mentioned earlier, TEEs are a relatively underexplored area in crypto, so most of the work in this area is still highly experimental.

MPC-Based zkTLS

Secure Multi-Party Computation (MPC) allows a group of parties, each holding a secret input (for example, a share of a value that no one has seen in full), to jointly compute an output without revealing their individual inputs. No party learns anything beyond what the output itself reveals. In the context of zkTLS, MPC-based models are designed around the idea that during the TLS handshake, no single party generates the session's symmetric keys. Instead, the keys are derived collaboratively, which distributes trust.
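A minimal sketch of collaborative key derivation in the spirit of MPC-TLS handshakes: the prover and notary each pick a secret exponent, the server sees one ordinary client public value, and each party can compute only its own share of the resulting Diffie-Hellman secret. The toy group (P = 2039) is an assumption chosen for readability and is not secure; real implementations work over elliptic curves and feed such shares into further 2PC to derive the session keys.

```python
import secrets

# Toy Diffie-Hellman group: P = 2*Q + 1, G = 4 has prime order Q. Not secure.
P, Q, G = 2039, 1019, 4


def random_exponent() -> int:
    return secrets.randbelow(Q - 1) + 1


# Server: an ordinary DH key pair.
server_secret = random_exponent()
server_public = pow(G, server_secret, P)

# Prover and notary each pick a private exponent; neither learns the other's.
prover_secret = random_exponent()
notary_secret = random_exponent()

# The "client" public value sent to the server is G^(prover_secret + notary_secret).
client_public = (pow(G, prover_secret, P) * pow(G, notary_secret, P)) % P

# Each party computes a multiplicative share of the shared secret locally.
prover_share = pow(server_public, prover_secret, P)
notary_share = pow(server_public, notary_secret, P)

# The server derives the usual DH secret. It equals the product of the two
# shares, but neither the prover nor the notary ever holds it alone.
server_view = pow(client_public, server_secret, P)
print(server_view == (prover_share * notary_share) % P)  # True
```

From the server's point of view this is a normal handshake; the split of the client role is invisible to it.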

Implementing MPC-based zkTLS in practice is resource-intensive, as it requires multiple nodes to participate in the computation, which can be expensive and complex to manage. Because of these bottlenecks, practical MPC-TLS protocols commonly implement the special case of two computing parties, one of which may be corrupted. This setup is referred to as Two-Party Computation (2PC).

2PC can be designed in two primary ways:

Garbled-Circuit Protocols: These protocols encode the computation as a Boolean circuit. One party garbles the circuit, and the other obtains the labels for its own input bits through 1-out-of-2 oblivious transfer and then evaluates it. In this setup, the server's response is decrypted within both the prover and the participating 2PC node. More advanced models may employ schemes based on vector oblivious linear evaluation (VOLE), which derive the server's write key only after the prover commits to the server's final response. This approach decreases processing time and enables selective disclosure of data. Garbled circuits are best suited for bitwise operations.

Threshold Secret Sharing Protocols: These protocols represent the computation's values as secret shares distributed between the prover and the 2PC node. This method is particularly effective for arithmetic operations.
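To illustrate the garbled-circuit approach, here is a minimal Python sketch that garbles and evaluates a single AND gate, using SHA-256 to encrypt each table row and an all-zero tag to recognize the one row the evaluator can open. This is the textbook construction, not the optimized garbling (free-XOR, half-gates) used by production 2PC libraries, and the oblivious transfer through which the evaluator would obtain its input label is assumed rather than implemented.

```python
import hashlib
import secrets

LABEL_LEN = 16
ZERO_TAG = bytes(16)  # lets the evaluator recognize the one row it can decrypt


def pad(label_a: bytes, label_b: bytes) -> bytes:
    return hashlib.sha256(label_a + label_b).digest()  # 32-byte one-time pad


def xor(x: bytes, y: bytes) -> bytes:
    return bytes(i ^ j for i, j in zip(x, y))


def garble_and():
    """Garbler: pick two random labels per wire and encrypt the AND truth table."""
    labels = {w: (secrets.token_bytes(LABEL_LEN), secrets.token_bytes(LABEL_LEN))
              for w in ("a", "b", "out")}
    table = []
    for bit_a in (0, 1):
        for bit_b in (0, 1):
            out_label = labels["out"][bit_a & bit_b]
            row = xor(pad(labels["a"][bit_a], labels["b"][bit_b]), out_label + ZERO_TAG)
            table.append(row)
    secrets.SystemRandom().shuffle(table)  # hide which row encodes which inputs
    return labels, table


def evaluate(table, label_a: bytes, label_b: bytes) -> bytes:
    """Evaluator: holds one label per input wire and learns only one output label."""
    key = pad(label_a, label_b)
    for row in table:
        plaintext = xor(key, row)
        if plaintext[LABEL_LEN:] == ZERO_TAG:
            return plaintext[:LABEL_LEN]
    raise ValueError("no row decrypted; labels do not belong to this gate")


if __name__ == "__main__":
    labels, table = garble_and()
    # The evaluator would receive labels["b"][its_bit] via 1-out-of-2 OT (not shown).
    out = evaluate(table, labels["a"][1], labels["b"][1])
    print(out == labels["out"][1])  # True
```

The evaluator sees only random-looking labels, so it learns the gate's output label without learning which bits the labels stand for.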

In MPC-TLS setups, the MPC component constrains the prover's ability to communicate directly with the server. Instead, a third party collaborates with the prover to encrypt, decrypt, and authenticate messages via MPC, without revealing the entire message passed from the server to the prover. This means the third party prevents the prover from falsifying data without ever knowing the raw value of the data.

The third party in this setup is often referred to as the notary, a term popularized by TLSNotary's pioneering work in the area. The notary uses blind signatures to sign commitments to the prover's messages, ensuring that the prover cannot alter the message before it is submitted to a protocol that utilizes the data.

For example, when demonstrating to a friend that a specific response originated from a website, you can provide both the server's response and an attestation from all participating MPC nodes (or the notary), confirming that they collaboratively decrypted the data from the website. Your friend will trust this proof if they believe it is highly improbable for you to have compromised or bribed each of the MPC nodes involved.

The MPC-TLS setup generally follows these steps:

Preprocessing Phase: The prover and notary perform oblivious transfer to prepare for faster proof generation.

Server Authentication: The prover obtains the server's public key and certificate chain to verify the server's authenticity.

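Key Generation: The prover and notary jointly run the client side of the key exchange, so that the TLS shared secret (from which the master key is derived) ends up secret-shared between them. The resulting keys are used for message encryption and authentication, and neither party holds them in full.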

Request Encryption: When the prover wishes to send a request to the server, they employ 2PC alongside the notary to encrypt and authenticate the request. The notary then blind-signs the resultant message before the prover sends it to the server.

Response Decryption: When the server responds to the prover's request, the prover and the notary use 2PC to decrypt and authenticate the returned message.

Proof Generation: At the end of the session, the prover possesses a certificate chain of the server's identity and notarized transcripts of the session. They use these to generate proofs to be passed along to a verifier, who can verify the correctness of the certificate chain and signatures, and thus verify the correctness of the underlying message.
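The notarization and proof-generation steps can be sketched as follows, under heavy simplifying assumptions: the prover commits to the transcript with a salted SHA-256 hash, the notary signs only that commitment, and a verifier later checks the opening against the signed commitment. A Lamport one-time signature stands in for the notary's real signature scheme, and the 2PC machinery that ties the commitment to the session the notary actually helped run is omitted. All function names are hypothetical.

```python
import hashlib
import secrets


def sha(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def lamport_keygen():
    # One-time key: 256 pairs of secrets; the public key is their hashes.
    secret_key = [(secrets.token_bytes(32), secrets.token_bytes(32)) for _ in range(256)]
    public_key = [(sha(s0), sha(s1)) for s0, s1 in secret_key]
    return secret_key, public_key


def digest_bits(message: bytes):
    d = sha(message)
    return [(d[i // 8] >> (7 - i % 8)) & 1 for i in range(256)]


def lamport_sign(secret_key, message: bytes):
    # Reveal one secret per digest bit. Never sign twice with the same key.
    return [secret_key[i][bit] for i, bit in enumerate(digest_bits(message))]


def lamport_verify(public_key, message: bytes, signature) -> bool:
    return all(sha(signature[i]) == public_key[i][bit]
               for i, bit in enumerate(digest_bits(message)))


def verify_web_proof(notary_pk, transcript: bytes, salt: bytes, commitment: bytes, sig) -> bool:
    """Verifier: the opening must match the commitment, and the notary must have signed it."""
    return sha(salt + transcript) == commitment and lamport_verify(notary_pk, commitment, sig)


if __name__ == "__main__":
    notary_sk, notary_pk = lamport_keygen()

    # Prover: commit to the transcript; only the commitment is shown to the notary.
    transcript = b'GET /account HTTP/1.1 ... {"balance": 120}'
    salt = secrets.token_bytes(32)
    commitment = sha(salt + transcript)

    # Notary: signs the commitment without seeing the transcript.
    signature = lamport_sign(notary_sk, commitment)

    # Verifier: receives (transcript, salt, commitment, signature).
    print(verify_web_proof(notary_pk, transcript, salt, commitment, signature))  # True
```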

This design space is the most explored among zkTLS models, with practical implementations like TLSNotary (TLSN) and Decentralized Oracle (DECO) leading the way.

DECO, developed by researchers at Cornell and later acquired by Chainlink, is a three-phase protocol that proceeds as follows:

Three-Party Handshake Phase: The prover, verifier, and server work together to establish secret-shared session keys for encryption and decryption functions. The prover and verifier effectively collaborate to fulfill the role of the "client" in traditional TLS settings.

Query Execution Phase: The prover sends their request to the server with assistance from the verifier, due to their keys being secret-shared.

Proof Generation Phase: The prover decides how and to what extent they'd like to exhibit their request to third parties. DECO offers two methods:

Selective Opening: A portion of the generated data is either revealed or redacted, allowing the prover to disclose only the necessary information.

Zero-Knowledge Two-Stage Parsing: The prover parses the session's data confidentially and then proves to the verifier, in zero knowledge, that a specific substring satisfies certain constraints.
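The selective-opening idea can be sketched with salted per-field hash commitments: the prover commits to every field of a response, opens only the fields it chooses, and the verifier checks each opened field against its commitment while redacted fields stay hidden. The random salts keep low-entropy values (like a birth date) from being guessed from their hashes. This is a simplified stand-in: DECO itself works on TLS records and session keys, and the field names and helper functions below are hypothetical.

```python
import hashlib
import json
import secrets


def field_commitment(name: str, value: str, salt: bytes) -> str:
    # json.dumps gives an unambiguous encoding of the (name, value) pair.
    return hashlib.sha256(salt + json.dumps([name, value]).encode()).hexdigest()


def commit_all(fields: dict):
    salts = {name: secrets.token_bytes(16) for name in fields}
    commitments = {name: field_commitment(name, value, salts[name])
                   for name, value in fields.items()}
    return salts, commitments


def open_selected(fields: dict, salts: dict, chosen):
    # Only the chosen fields (and their salts) are disclosed; the rest stay redacted.
    return {name: (fields[name], salts[name]) for name in chosen}


def check_opening(commitments: dict, opening: dict) -> bool:
    return all(name in commitments
               and field_commitment(name, value, salt) == commitments[name]
               for name, (value, salt) in opening.items())


if __name__ == "__main__":
    response = {"name": "Alice", "date_of_birth": "1990-01-01", "country": "DE"}
    salts, commitments = commit_all(response)
    opening = open_selected(response, salts, ["country"])
    print(check_opening(commitments, opening))  # True; other fields stay hidden
```

For this to mean anything, the commitments themselves must be bound to the authenticated session, for example by the verifier's participation in the handshake.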

In summary, MPC-based zkTLS models leverage the principles of secure multi-party computation to enhance the security and privacy of TLS communications. By distributing trust among multiple parties and employing advanced cryptographic techniques, these models aim to provide robust proofs of authenticity without compromising sensitive data.

Proxy-Based zkTLS

The proxy model of zkTLS is the simplest iteration, employing a middleman known as the proxy to facilitate communication between the prover (browser) and the server (website). Instead of sending requests directly to the website, the browser sends them via an HTTPS proxy. The website responds to the browser through this proxy by default. As a result, the proxy observes all the encrypted requests and responses exchanged between the browser and the website.

The proxy attests to the encrypted requests and responses, indicating for each piece of data whether it is a request or a response, in effect attesting to whether the encrypted data was sent by the browser or by the website. The browser then creates a zero-knowledge proof (zkProof) of the decryption of the response. This zkProof is equivalent to saying, "I know a shared key that decrypts this encrypted data, and here is the decryption, but I will not tell you the shared key itself." This relies on the fact that it is impractical to find a different key that decrypts the same data to anything other than gibberish, so demonstrating the ability to decrypt the data is sufficient and knowledge of the key itself is unnecessary. Revealing the key would in fact compromise all other messages sent earlier, including sensitive information like usernames and passwords.
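The property the zkProof relies on can be illustrated with authenticated encryption: with the right key, the ciphertext opens to the plaintext, and with any other key the authentication check fails instead of yielding a plausible alternative. The minimal sketch below shows only that property; it does not implement a zero-knowledge proof, and its encrypt-then-MAC construction (SHA-256 keystream plus HMAC-SHA256) is a toy stand-in for the AEAD ciphers TLS 1.3 actually uses, such as AES-GCM. The function names are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def _subkeys(key: bytes):
    # Derive independent encryption and MAC keys from one session key.
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    out, counter = b"", 0
    while len(out) < length:
        out += hashlib.sha256(enc_key + nonce + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def seal(key: bytes, plaintext: bytes):
    enc_key, mac_key = _subkeys(key)
    nonce = secrets.token_bytes(16)
    stream = _keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    tag = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    return nonce, ciphertext, tag


def try_open(key: bytes, nonce: bytes, ciphertext: bytes, tag: bytes) -> Optional[bytes]:
    """Return the plaintext only if `key` authenticates the ciphertext."""
    enc_key, mac_key = _subkeys(key)
    expected = hmac.new(mac_key, nonce + ciphertext, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        return None  # wrong key: no alternative "decryption" is accepted
    stream = _keystream(enc_key, nonce, len(ciphertext))
    return bytes(c ^ k for c, k in zip(ciphertext, stream))


if __name__ == "__main__":
    session_key = secrets.token_bytes(32)
    sealed = seal(session_key, b'{"followers": 5120}')
    print(try_open(session_key, *sealed))              # b'{"followers": 5120}'
    print(try_open(secrets.token_bytes(32), *sealed))  # None
```

Roughly speaking, the prover's zkProof asserts that it knows a key for which this check succeeds on the proxy-attested ciphertext and yields the disclosed plaintext, without revealing the key.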

For example, when proving to a friend that a certain response came from a website, you can say, "Here's the encrypted data, and here's proof from the proxy that it was sent by the website, and here's the zkProof that I have the shared key that decrypts it, and here is that decryption." Your friend will trust this proof if they believe it is unlikely that you have bribed the proxy to falsely attest that certain encrypted data came from the website when it actually came from you. This approach is efficient in both computational and networking resources, as long as you are willing to accept the risk of a very hard-to-execute attack that requires physical access to the proxy's machine.

In a simpler variant of this scheme, the proxy observes and stores the encrypted data passed in both directions over the channel established between the prover and the server. At the end of a TLS session, the prover sends the proxy the session's certificate chain, which the proxy uses to deduce the server's identity, together with the symmetric encryption key used during the session, enabling the proxy to decrypt the communicated data. The proxy then attests to the server's identity using the certificate chain, decrypts the TLS transcript using the prover's symmetric key, and signs the decrypted data with its own key. The proxy-signed transcript can then be passed to a third-party protocol, which is assured of the data's authenticity and provenance by the proxy's signature.

However, this scheme introduces significant trust assumptions. The proxy becomes an enshrined trusted party whose role can impede the privacy of the prover's data and bottleneck network performance due to the extra computational load. A malicious or compromised proxy could collude with the prover to falsify data. Proxy systems, while computationally simpler and faster, come with heightened security risks due to the difficulty of securing the computation. This means that even though proofs can be generated faster, their integrity remains questionable.

Notable efforts in this area include the Reclaim protocol, which is making strides with their verifiers referred to as attestors.

zkTLS Ecosystem

Applications like zkPass, Reclaim Protocol, Opacity Network, and zkMe use zkTLS to enhance privacy and security. zkPass enables secure, privacy-preserving data verification; Reclaim Protocol uses zkTLS to make HTTPS data verifiable; and zkMe lets users control and verify their data without exposing it. Together they show zkTLS's ability to prioritize security and privacy in decentralized systems.

zkPass

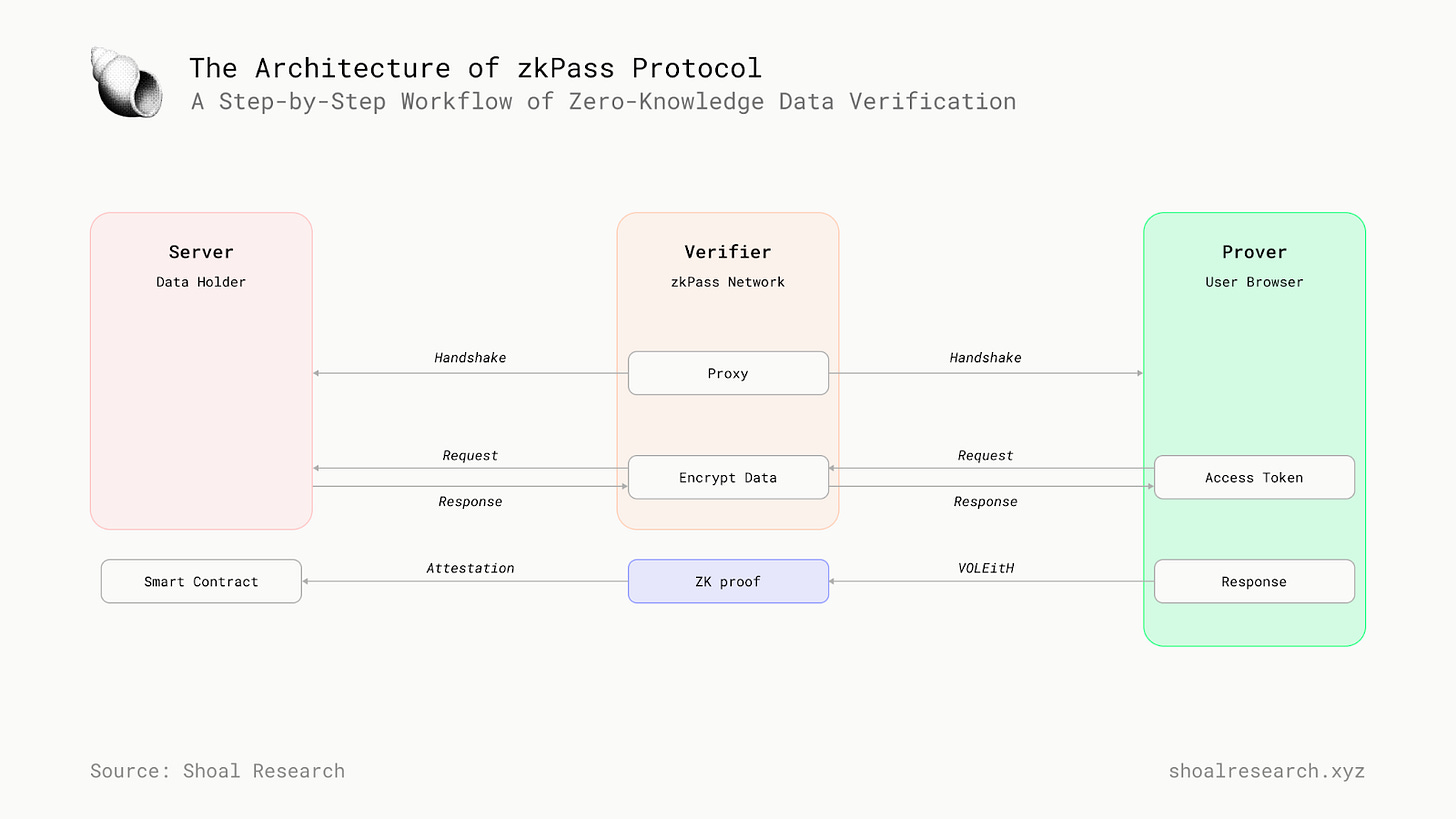

zkPass is a private oracle protocol that enables private internet data to be verifiable on-chain. Built on top of zkTLS, which is composed of 3P-TLS and Hybrid ZK technologies, zkPass provides tools and applications for secure, verifiable data sharing with privacy and integrity assurances from any HTTPS website without requiring OAuth APIs.

zkPass allows users to selectively prove various types of data, such as legal identity, financial records, healthcare information, social interactions, and certifications. These zero-knowledge proof computations are performed locally and securely, ensuring that sensitive personal data is not leaked or uploaded to third parties. They can be used for AI, DePIN, DID, lending, and other financial and non-financial applications. Wherever there is a need for trust and privacy, zkPass can be a solution. zkPass proposes a new paradigm for the traditional data validation and confirmation process, where the verifier is positioned between the prover and the data source. The prover uses the verifier as a proxy, utilizing its access token to retrieve data from the data source. Subsequently, by using the VOLEitH technique, a publicly verifiable proof is generated locally and sent on-chain. This process ensures the verifier remains unaware of the prover's personal information. zkPass introduces 3P-TLS, ZK, and VOLEitH technologies to implement its new paradigm of data validation and confirmation.

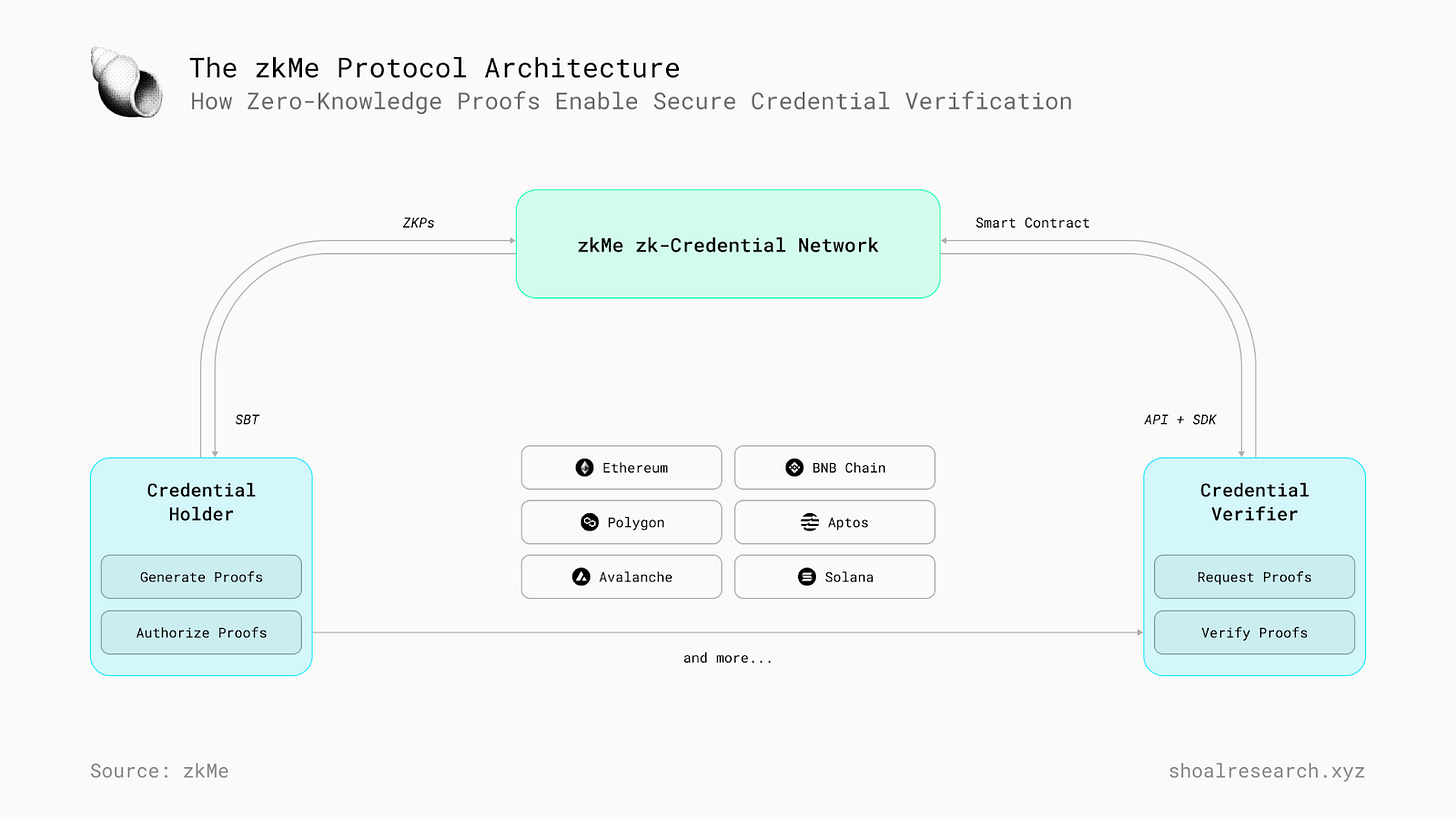

zkMe

As Web3 continues to evolve, the need for secure and private identification methods becomes increasingly important. One compelling example is the use of decentralized identity (DID) systems. Traditional centralized identity systems store personal data on a single server, making them vulnerable to hacking and unauthorized access. As mentioned previously, zero-knowledge proof technology enables users to verify their identity without disclosing sensitive information. While there are various approaches to this technology, zkMe offers a unique solution that ensures privacy and security for Web3 users. zkMe is an implementation of a credential network protocol that provides secure and private identification in the Web3 ecosystem, using zero-knowledge proofs (ZKPs) to let users authenticate their identity without revealing any sensitive information.

zkMe can be integrated into various Web3 applications, such as decentralized exchanges, voting systems, and decentralized social networks, to provide users with secure and private identification. In addition to its security and privacy benefits, zkMe also offers the convenience of a universal login system. Users can use their zkMe DID to log in to multiple applications without having to create a new account for each one.

To ensure the security and privacy of its users, zkMe employs several mechanisms. The most important is zero-knowledge proof technology, which, as described earlier, lets users prove the validity of a statement without revealing anything beyond what is necessary. zkMe also employs multi-party computation (MPC) so that no single entity holds all of the information needed to identify a user. In MPC, multiple parties jointly compute a result without any party learning the others' inputs. For example, suppose Alice wants to create a decentralized identity (DID) on a blockchain network using zkMe. She provides her personal information, such as her name, date of birth, and government-issued ID number, to zkMe.

zkMe then uses MPC to distribute this information across a network of nodes. Each node holds only part of Alice's information, and the nodes collaborate to compute the final result: Alice's DID. Because no single node can access all of Alice's information, her privacy is protected and the risk posed by any single data breach is reduced. Furthermore, zkMe's zero-knowledge proofs let Alice prove her identity without revealing personally identifiable information or other sensitive data.
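The text does not say which MPC scheme zkMe uses, so the following is only a minimal sketch of additive secret sharing, a common MPC building block assumed here for illustration. It shows the property described above: each node holds a share that is meaningless on its own, yet the nodes can still compute on the shared values. The attribute encoding, modulus, and function names are hypothetical, and this is not zkMe's actual protocol.

```python
import secrets

# Field modulus: the Mersenne prime 2^127 - 1 (an illustrative choice).
PRIME = 2**127 - 1


def share(secret: int, n: int) -> list[int]:
    """Split `secret` into n additive shares modulo PRIME.

    Any n - 1 of the shares are uniformly random, so they reveal nothing
    about the secret; all n are needed to reconstruct it.
    """
    shares = [secrets.randbelow(PRIME) for _ in range(n - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: list[int]) -> int:
    return sum(shares) % PRIME


def add_shared(a: list[int], b: list[int]) -> list[int]:
    """Each node adds the two shares it holds locally, producing shares of
    a + b without any node seeing a or b."""
    return [(x + y) % PRIME for x, y in zip(a, b)]


# Hypothetical example: an ID attribute encoded as an integer, held by three nodes.
id_number = 123456789
node_shares = share(id_number, 3)
print(reconstruct(node_shares) == id_number)  # True

# Nodes can jointly compute on shared values: shares of 5 plus shares of 7.
print(reconstruct(add_shared(share(5, 3), share(7, 3))))  # 12
```

Addition is free in this scheme; multiplying shared values requires extra interaction between nodes, which is where most of the cost of real MPC protocols lies.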

Finally, zkMe utilizes a decentralized infrastructure to further enhance security and privacy. Rather than relying on a central authority to manage user data, zkMe’s infrastructure is distributed across a network of nodes, making it more resilient to attacks and reducing the risk of a single point of failure.

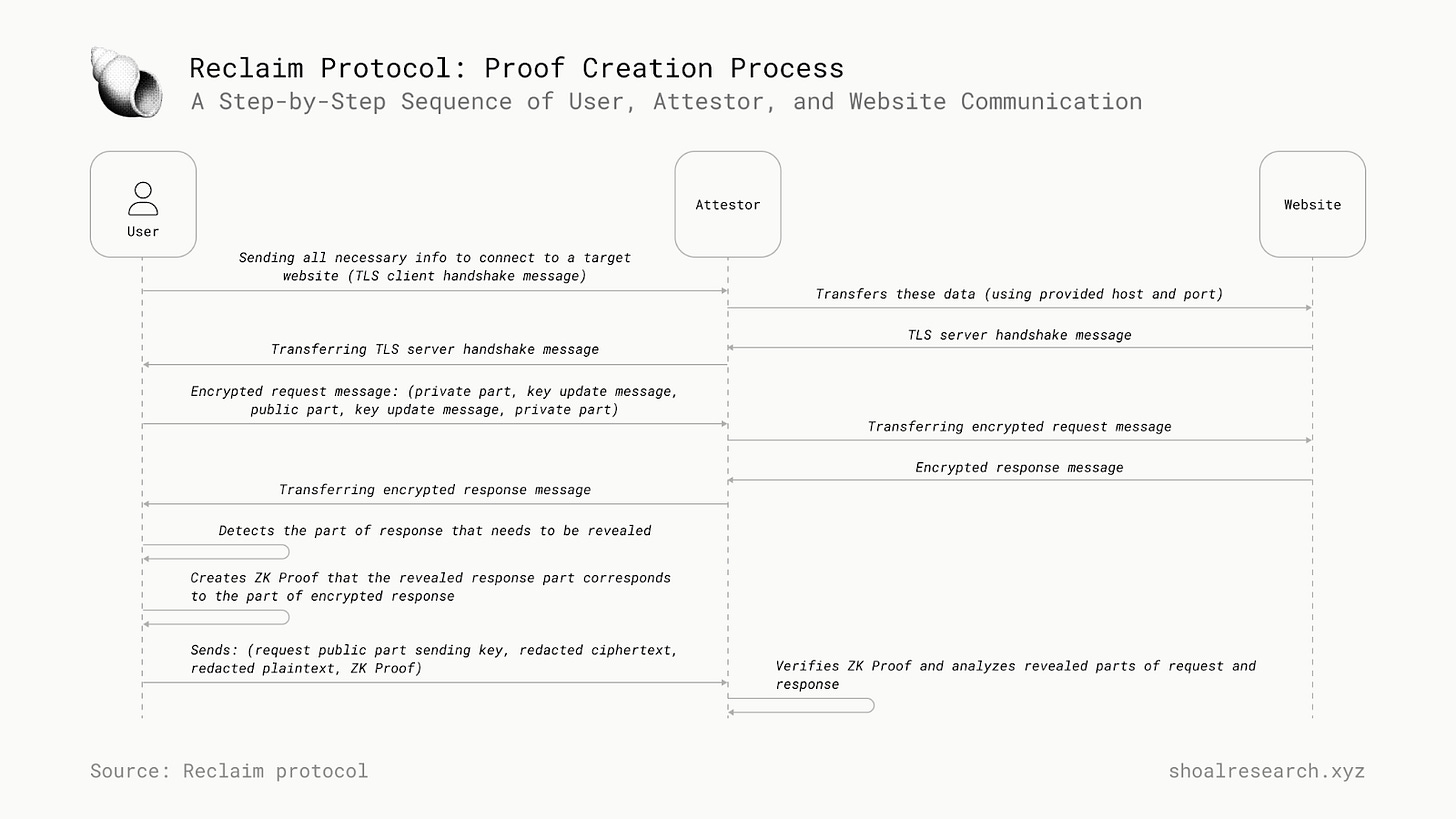

Reclaim Protocol

Reclaim Protocol makes HTTPS data verifiable using zero-knowledge proofs. Without zero knowledge, proving facts about such data is surprisingly hard. Suppose you want to prove to me that you have more than a million dollars in your bank account. One option is to send me a screenshot, but I cannot be sure you didn't edit it. Another option is to send me your username and password so I can log in and check your balance myself, but that requires you to trust me not to drain your account. How can you share verifiable information with a stranger on the internet when neither kind of trust is available? That is the problem Reclaim Protocol solves.

Reclaim Protocol generates a zk proof about the data exchanged in a TLS session. When you open an HTTPS website, your browser performs a TLS handshake with the server, during which the server presents its public key and certificate. The certificate binds that public key to the site's domain name and is signed by a certificate authority (CA) that the browser trusts.

How does the Reclaim protocol work?

To create a proof of a claim, the user first logs in to the website that holds the data and then navigates to the specific page containing it. The HTTPS request for that page, and the response, are routed through an HTTPS proxy server called an attestor. The attestor monitors the encrypted packets exchanged between the user and the website; as a proxy, it sees only ciphertext, not plaintext. The user shares a subset of the session keys with the attestor, which uses them to decrypt the relevant traffic and verify that the user indeed owns the credential. The attestor then produces a signature attesting to this fact. This signature is the proof, and it can be shared with any third party.
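The following minimal sketch shows only the last step described above: the attestor signs a claim, and any third party can check the signature using nothing but the attestor's public key. Textbook hash-then-sign RSA over a toy key built from public Mersenne primes is an assumption made to keep the example dependency-free; a production attestor would use a standard, audited signature scheme and library. The claim format and function names are hypothetical, and the attestor's traffic checks are not modeled.

```python
import hashlib
from math import lcm

# Toy RSA key built from two well-known Mersenne primes. Anyone can factor this
# modulus, so it must never be used for real signatures.
_P, _Q = 2**89 - 1, 2**107 - 1
N = _P * _Q
E = 65537
D = pow(E, -1, lcm(_P - 1, _Q - 1))  # private exponent, held only by the attestor


def _digest(claim: bytes) -> int:
    # Hash the claim and reduce it into the range [0, N).
    return int.from_bytes(hashlib.sha256(claim).digest(), "big") % N


def attest(claim: bytes) -> int:
    """Attestor side: sign the claim with the private exponent."""
    return pow(_digest(claim), D, N)


def verify(claim: bytes, signature: int) -> bool:
    """Third-party side: needs only the attestor's public key (N, E)."""
    return pow(signature, E, N) == _digest(claim)


# Hypothetical claim format; real claim encodings are protocol-specific.
claim = b'{"domain": "bank.example", "statement": "balance > 1000000"}'
signature = attest(claim)
print(verify(claim, signature))                            # True
print(verify(claim.replace(b"1000000", b"1"), signature))  # False
```

Because verification needs only public values, the proof is portable: any application can check it without contacting the attestor, but every verifier must trust that the attestor actually performed its checks honestly.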

To verify that the data shown on a web page is accurate while preserving privacy, Reclaim Protocol introduces an HTTP proxy that witnesses the handshake and data transfer. Each page request goes through a randomly selected HTTP Proxy Witness; HTTP proxies are common infrastructure on the internet. The witness checks that (1) the handshake was performed with the correct domain name, (2) the correct webpage was requested, and (3) an encrypted response was received. The witness sees only the encrypted response and cannot read its contents. That encrypted response is then fed into a zk circuit that runs entirely on the client side: the AES or ChaCha20 decryption happens inside the circuit, and a search is performed on the decrypted HTML page.
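To make the split of responsibilities concrete, the following minimal sketch separates what the witness checks from the statement the client proves, reusing the million-dollar balance example. Assumptions, all hypothetical: a SHA-256 counter-mode keystream stands in for AES/ChaCha20; the transcript is a plain dict with invented field names; the witness is assumed to learn the requested path (for example, because the client reveals the request); and the "circuit" is evaluated as ordinary Python rather than proven in zero knowledge.

```python
import hashlib
import re


def keystream(key: bytes, length: int) -> bytes:
    """Toy keystream: SHA-256 in counter mode, standing in for AES/ChaCha20."""
    blocks = []
    for counter in range((length + 31) // 32):
        blocks.append(hashlib.sha256(key + counter.to_bytes(8, "big")).digest())
    return b"".join(blocks)[:length]


def xor_crypt(key: bytes, data: bytes) -> bytes:
    # XOR with the keystream; the same call encrypts and decrypts.
    return bytes(a ^ b for a, b in zip(data, keystream(key, len(data))))


def witness_checks(transcript: dict, domain: str, path: str) -> bool:
    """Checks (1)-(3) from the text, using only what the witness can observe."""
    return (
        transcript["handshake_domain"] == domain          # (1) correct domain
        and transcript["request_path"] == path            # (2) correct page requested
        and len(transcript["encrypted_response"]) > 0     # (3) encrypted response seen
    )


def relation(key: bytes, ciphertext: bytes, threshold: int) -> bool:
    """The statement the client-side circuit would prove: decrypting `ciphertext`
    with the private `key` yields a page whose balance exceeds `threshold`.
    Evaluated in the clear here; no zero-knowledge proof is produced."""
    page = xor_crypt(key, ciphertext).decode("utf-8", errors="replace")
    match = re.search(r'"balance":\s*(\d+)', page)
    return match is not None and int(match.group(1)) > threshold


# Hypothetical session: the key stays with the client, the witness sees ciphertext.
session_key = b"hypothetical-session-key"
page = b'<html><script>var account = {"balance": 1250000};</script></html>'
transcript = {
    "handshake_domain": "bank.example",
    "request_path": "/account/summary",
    "encrypted_response": xor_crypt(session_key, page),
}
print(witness_checks(transcript, "bank.example", "/account/summary"))      # True
print(relation(session_key, transcript["encrypted_response"], 1_000_000))  # True
```

In the real protocol, the client would output a proof that `relation` holds for the ciphertext the witness saw, so a verifier learns "balance exceeds 1,000,000" but never the key, the page, or the exact balance.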

Opacity Network

Opacity Network is a data verification platform that uses blockchain technology to secure and verify data transactions while preserving user privacy. At the core of its functionality are zero-knowledge proofs, which let users prove the validity of their data without disclosing the data itself. This matters in a digital landscape where data privacy concerns are paramount: by keeping sensitive information confidential, Opacity Network aims to offer a reliable way to verify data authenticity across platforms.

Opacity Network has integrated its AVS (Actively Validated Service) with EigenLayer mainnet to strengthen its data verification capabilities. This integration uses a secure Multi-Party Computation (MPC) network that lets users generate proofs about their data on existing Web2 platforms. The result improves usability and strengthens user data sovereignty, giving users control over their personal data as they interact with various applications.

Clique

Clique is pioneering a new paradigm for building dApps and consumer applications with its TEE coprocessor, which acts as an off-chain analogue of AWS Lambda. It offers confidentiality and verifiability for on-chain applications while remaining versatile, cost-effective, and secure.

The coprocessor architecture is built on a network of TEE nodes that support custom bytecode execution for VMs (currently EVM and WASM, with more planned). Clique also provides SDKs that let clients build custom executors in TEEs, make smart contract calls to the coprocessor network, and verify attestations and trusted signatures on-chain. Orchestration nodes running inside enclaves create compute graphs, distribute tasks, and aggregate proofs. Clique currently focuses on Intel SGX, with plans to support AMD SEV-SNP, Intel TDX, and NVIDIA H100 soon to improve trust assumptions and efficiency.
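The orchestration step described above (build a compute graph, distribute tasks, aggregate results) can be sketched with Python's standard `graphlib.TopologicalSorter`. This is a minimal, sequential sketch under stated assumptions: the graph, task bodies, and receipt format are hypothetical, and SHA-256 digests stand in for real TEE attestations and proof aggregation, which this code does not perform. It is not Clique's SDK or orchestration logic.

```python
import hashlib
from graphlib import TopologicalSorter

# Hypothetical compute graph: each task maps to the set of tasks it depends on.
GRAPH = {
    "balance_chain_a": set(),
    "balance_chain_b": set(),
    "total": {"balance_chain_a", "balance_chain_b"},
    "eligible": {"total"},
}

# Hypothetical task bodies; in a coprocessor each would run inside a TEE executor.
TASKS = {
    "balance_chain_a": lambda deps: 40,
    "balance_chain_b": lambda deps: 25,
    "total": lambda deps: deps["balance_chain_a"] + deps["balance_chain_b"],
    "eligible": lambda deps: deps["total"] >= 50,
}


def orchestrate(graph, tasks):
    """Run tasks in dependency order and aggregate one receipt per task."""
    results, receipts = {}, []
    for name in TopologicalSorter(graph).static_order():
        deps = {dep: results[dep] for dep in graph[name]}
        results[name] = tasks[name](deps)
        # Stand-in for an enclave attestation: a hash binding task name to output.
        receipts.append(hashlib.sha256(f"{name}={results[name]!r}".encode()).digest())
    # Stand-in for proof aggregation: one digest committing to every receipt.
    aggregate = hashlib.sha256(b"".join(receipts)).hexdigest()
    return results, aggregate


results, aggregate = orchestrate(GRAPH, TASKS)
print(results["total"], results["eligible"])  # 65 True
```

A real orchestrator would dispatch independent ready tasks (here, the two balance lookups) to different enclaves in parallel, a pattern `TopologicalSorter` supports through its `get_ready()` and `done()` methods.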

This technology ecosystem enables secure, efficient, and verifiable off-chain computation, using TEEs for data integrity and confidentiality and HSMs to protect validator keys. It supports TLS calls for external data, verifiable AI outputs, and user data attestations for incentive programs. The system facilitates arbitrary computation, HTTPS endpoints with data privacy, and use cases such as MEV strategies, automation bots, and decentralized applications (e.g., loyalty programs, gaming, DeFi). It also enables coordination between untrusted parties and helps secure blockchain components such as wallets, bridges, and oracles.

Primus

Primus is building a privacy-preserving data infrastructure by combining zkTLS and zkFHE to bridge Web2 and Web3 while enabling verifiable AI. The goal is to make offchain data useful onchain without exposing sensitive information or relying on centralized gatekeepers.

Primus’s core innovation lies in its hybrid cryptographic stack, leveraging:

- zkTLS: Extends TLS by embedding zero-knowledge proofs (ZKPs), ensuring data integrity and authenticity while maintaining end-to-end encryption.
  - Allows selective disclosure of offchain data.
  - Uses QuickSilver, a high-performance ZKP system; Primus reports that this makes its zkTLS 10x faster than alternatives such as DECO.
  - Suited to onchain credit scoring, identity verification, and AI-powered analytics.
- zkFHE (Zero-Knowledge Fully Homomorphic Encryption): Enables computation on encrypted data without decrypting it.
  - Primus reports 340x faster proving times than existing zkFHE models.
  - Supports privacy-preserving AI, federated learning, and decentralized financial modeling.
  - Allows trustless AI agents to process encrypted datasets in Web3 ecosystems.
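zkFHE itself is far beyond a short example, so the following minimal sketch swaps in the Paillier cryptosystem, which is only additively homomorphic (not fully homomorphic) and produces no zero-knowledge proof. It illustrates just the property stated above, computing on encrypted data without decrypting it: a party multiplies ciphertexts to obtain an encryption of the sum of the inputs. The toy key uses public Mersenne primes and is insecure; the inputs and function names are hypothetical, and none of this reflects Primus's implementation.

```python
import secrets
from math import gcd, lcm

# Toy Paillier key from two public Mersenne primes; insecure, for illustration only.
P, Q = 2**61 - 1, 2**89 - 1
N = P * Q
N_SQ = N * N
LAM = lcm(P - 1, Q - 1)
MU = pow(LAM, -1, N)  # valid because the generator is g = N + 1


def encrypt(m: int) -> int:
    """Enc(m) = g^m * r^N mod N^2, with g = N + 1 and random r coprime to N."""
    while True:
        r = secrets.randbelow(N - 1) + 1
        if gcd(r, N) == 1:
            break
    return (pow(N + 1, m, N_SQ) * pow(r, N, N_SQ)) % N_SQ


def decrypt(c: int) -> int:
    """Dec(c) = L(c^lambda mod N^2) * mu mod N, where L(x) = (x - 1) // N."""
    return ((pow(c, LAM, N_SQ) - 1) // N) * MU % N


def add_encrypted(c1: int, c2: int) -> int:
    """Multiplying ciphertexts adds the underlying plaintexts (mod N)."""
    return (c1 * c2) % N_SQ


# Hypothetical encrypted inputs: the party summing them never decrypts.
salaries = [4200, 5100, 3900]
ciphertexts = [encrypt(s) for s in salaries]
encrypted_total = ciphertexts[0]
for c in ciphertexts[1:]:
    encrypted_total = add_encrypted(encrypted_total, c)
print(decrypt(encrypted_total))  # 13200
```

Fully homomorphic schemes extend this idea to both addition and multiplication, which is what arbitrary AI inference over encrypted data requires; the "zk" part would additionally prove that the encrypted computation was performed correctly.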

How It Works

Data Verification: zkTLS allows users to prove real-world facts (e.g., financial history, social credentials) onchain without exposing private details.

Secure AI Processing: zkFHE lets AI models compute over encrypted data, unlocking confidential analytics and autonomous AI agents for decentralized ecosystems.

Web2-Web3 Interoperability: Primus bridges offchain data with onchain protocols, enabling privacy-preserving integrations for finance, identity, and AI-driven applications.

The two primary models commonly used for zkTLS are the MPC model and the Proxy model. Each model presents its own trade-offs in terms of security and performance. Primus addresses these differences by providing unified APIs, allowing developers to choose the most suitable model based on the specific requirements of their applications.

zkTLS Ecosystem Map

zkTLS is shaping the next generation of verifiable, privacy-preserving TLS attestations, allowing users to prove statements about their data without exposing the underlying details. Early research on TLS oracles tackled the challenge of securely verifying TLS data without modifying Web2 servers. TLSNotary (TLSN) and Decentralized Oracle (DECO) pioneered cryptographic proofs for TLS 1.2 sessions, while later approaches, such as DIDO and DiStefano, extended these methods to TLS 1.3. These protocols rely on secure two-party computation (2PC) to ensure data authenticity and prevent tampering. Crunch took a different approach, shifting computational overhead from MPC to RISC Zero (zk-Risc0) proofs, reducing costs and making TLS oracles more scalable. Together, these protocols lay the foundation for Web2-to-Web3 interoperability, enabling applications in which off-chain data can be securely used onchain.

Beyond direct TLS verification, zkTLS projects are integrating with attestation registries and cryptographic middleware to enhance data portability. Verax, EAS, BAS, and Sign Protocol allow TLS attestations to be stored, bridged, and used across multiple blockchains. Primus is advancing zkTLS by combining it with zkFHE, enabling privacy-preserving AI models that can compute over encrypted data. Other projects like zkMe are applying zkTLS to decentralized identity (DID) and authentication, while zkon.xyz explores Web3-native integrations. These efforts push the boundaries of trusted Web2-Web3 interactions, creating more efficient, scalable, and privacy-focused solutions for integrating off-chain data into decentralized applications.

General applications of zkTLS

So far we've focused on how to efficiently export data and produce web proofs; now it's time for the why. Why would anyone want this? Why risk the wrath of litigious behemoths for marginal improvements to UX? Is there no better way to do this?

Simply put, if data is the new oil, as they say, then you should probably be in charge of yours. Letting users aggregate their own data isn't unprecedented: crawlers have served this purpose for traditional banking, aggregators serve it for crypto markets, and account abstraction models will eventually serve it for crypto users and identities.

Web proofs generated via zkTLS schemes let users aggregate and control their own data and, eventually, use it as they see fit.

There will probably be better ways to do this eventually, but we mustn't let perfect be the enemy of better. zkTLS lets more be done permissionlessly, which makes it the better path to user empowerment for the near- to mid-term future.

There are already working applications enabled by zkTLS, such as Teleport, zkP2P, zkPass, zkTix, and numerous zkKYC platforms. DeFi applications are also likely to make more use of the technology over time, especially if undercollateralized lending catches on.

Wrapping Up

The advent of zkTLS has the potential to revolutionize digital communications by addressing significant flaws in current data-sharing and verification systems. Traditional TLS ensures secure data transmission but falls short in providing independent verification or facilitating data portability. zkTLS, by incorporating zero-knowledge proofs, overcomes these limitations, allowing users to prove data authenticity without disclosing sensitive details.

This unique capability empowers users to export and share their data seamlessly, promoting greater autonomy and privacy. The system's flexibility, demonstrated through its proxy, TEE, and MPC implementations, opens new possibilities for cross-environment data utilization—from verifying marathon participation for discounts to incentivizing customer loyalty across competing platforms. By enabling trustless verification and privacy-centric data sharing, zkTLS supports a future where the internet is both more open and aligned with the rights and needs of individuals, paving the way for a more interconnected and user-empowered digital world.

Resources

Hindi, R. [@randhindi]. (2024, March 29). [Tweet]. X. https://x.com/randhindi/status/1836329769597600232

Elitzer, D. [@delitzer]. (2024, March 21). [Tweet]. X. https://x.com/delitzer/status/1828833053000839578

Artichmaro [@artichmaro]. (2024, March 29). [Tweet]. X. https://x.com/artichmaro/status/1836311271769079979

Weidai [@_weidai]. (2024, March 21). [Tweet]. X. https://x.com/_weidai/status/1829194060554522915

Matetic, S., Schneider, M., Miller, A., Juels, A., & Capkun, S. (2018). DelegaTEE: Brokered delegation using trusted execution environments. In Proceedings of the 27th USENIX Security Symposium (pp. 1387–1403). USENIX Association. https://www.usenix.org/conference/usenixsecurity18/presentation/matetic

zkMe. (n.d.). Solution overview. Retrieved December 3, 2024, from https://docs.zk.me/zkme-dochub/getting-started/solution-overview

zkPass. (n.d.). zkPass documentation. Retrieved December 3, 2024, from https://zkpass.gitbook.io/zkpass

Chainlink Labs. (n.d.). Chainlink documentation. Retrieved December 3, 2024, from https://docs.chain.link/

Reclaim Protocol. (n.d.). Reclaim Protocol SDKs. Retrieved December 3, 2024, from https://docs.reclaimprotocol.org/

Not financial or tax advice. The purpose of this post is purely educational and should not be considered as investment advice, legal advice, a request to buy or sell any assets, or a suggestion to make any financial decisions. It is not a substitute for tax advice. Please consult with your accountant and conduct your own research.

Disclosures. All posts are the author's own, not the views of their employer. This post has been sponsored by zkPass team. At Shoal Research, we aim to ensure all content is objective and independent. Our internal review processes uphold the highest standards of integrity, and all potential conflicts of interest are disclosed and rigorously managed to maintain the credibility and impartiality of our research.